Image Classification vs. Object Detection: Key Differences & How to Choose

Image classification and object detection are two popular types of computer vision that teams sometimes confuse. After all, classification does “detect” things like object detection does.

However, image classification and object detection each have precise meanings, and it’s often better to use one versus the other, depending on your use case. In this article, we’ll cover the differences between image classification and object detection and help you choose which makes the most sense for you.

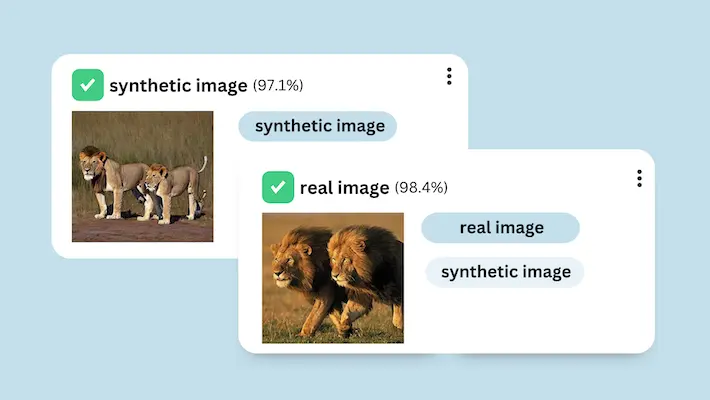

What is image classification?

The primary objective of image classification is to sort images into specific categories by labeling them based on their visual content. For example, Gardyn, which sells smart, indoor vertical hydroponic systems, uses image classification to quickly identify the health of its customers’ plants as either ‘healthy’ or ‘wilting.’

The first step to train an image classification model is to annotate a training dataset. For Gardyn, this means labeling at least ten images per label (‘healthy’ and ‘wilting’) and then feeding that dataset to an image classification model. The image classifier then learns how to differentiate between healthy and ailing plants by analyzing the distinct visual features of the images.

Once the model is complete, Gardyn automatically routes new photos of customer plants directly to the classifier for labeling. If a plant is wilting, it notifies the customer that it needs their attention.

There are three types of image classification:

- Binary classification: Tags images with one of two possible labels (e.g., dog or not dog)

- Multi-class classification: Tags images with one of many possible labels (e.g., dog, cat, bird, squirrel, rabbit, deer, coyote, fox, etc.). Each image fits into one category.

- Multi-label classification: Can tag images with multiple labels (e.g., black, white, brown, red, orange, yellow, etc.) Each image can fit into multiple categories.

What is object detection?

Object detection, on the other hand, identifies the location and number of specific objects in an image. Some businesses use object detection similar to how people use image classification: to detect if something is present in an image. While image classification is typically the preferred method for this, users sometimes prefer object detection when the target object is relatively small in the image.

Training an object detector is similar to training an image classifier. First, you must have an annotated training dataset. However, for object detection, you must annotate it by clicking the targeted object(s) center point or drawing a bounding box around it. This annotation process differs from image classification, which involves adding a label(s) to the whole image.

Most object detectors draw a bounding box around the detected objects; however, sometimes, detecting the center point of each object is sufficient and quicker. For many applications, center detection can give you all the information you need, allowing you to train and launch your model quicker than with bounding boxes. At Nyckel, we offer center detect and box detect.

How to choose: image classification vs. object detection

Here are some considerations around which to choose for your use case. Reach out at any time if you need help troubleshooting.

Choose image classification when:

- You want to classify or sort entire images into buckets.

- The location or number of objects in the image isn’t important.

Choose object detection when:

- You need to identify the location or count of an object.

- The object you want identified is just a small part of the image and/or your images are noisy (aka, there’s a lot going on beyond just the object).

Gardyn, the home gardening company mentioned above, might choose to use object detection for some of its models if the object they are trying to detect in the image is small in comparison to the rest of the image. See below for how Gardyn used object detection to detect strawberries in an image.

Model architectures for image classification and object detection

Which path you take will also influence what model architecture you should go with.

Image classification model architectures

Until recently, Convolutional Neural Networks (CNNs) were the state-of-the-art model architecture for image classification. However, Vision Transformers (ViT), such as Clip, have taken over as the gold standard.

Object detection model architectures

With object detection, though, CNNs are still the better choice, as they work better for bounding box detection. Currently the YOLO series from Ultralytics, which uses CNNs, is considered the most advanced object detection model.

Get started with your project

With Nyckel, you can build both image classification and object detection models in just minutes. Unlike other solutions, no machine learning knowledge is needed.

Sign up for a free account to get started, or reach out if you need help determining what’s best for your use case, be it image classification, center point object detection, or bounding box object detection.